“Eloquence is a painting of the thoughts.” - Blaise Pascal, inventor of the calculator

This morning I opened a can of soup. A benign innovation now … soup in a can. I imagine the inventor dressed in a black turtleneck, giving a PowerPoint presentation in a packed auditorium. “This invention will change the way we consume food…forever.” For the next few weeks, his face is plastered on the front page of every newspaper. He is named Time's Man of the Year. Decades later…no one thinks about it. The idea that canned goods, by preserving food over long periods, were instrumental in humanity's ability to produce value without worrying about hunger…doesn’t even register. Perhaps the role of a great invention is to fade into the background, so integral to daily life that it is never noticed. This is why I think that ChatGPT is just an overhyped calculator.

The revolutionary inventor of canned-goods…I think

AI is a shock to the system. As we witness the technology go mainstream, the diversity of opinions is more entertaining than informative. Some of us believe that AI is the beginning of the end for the human race, while others believe that this is the beginning of the beginning, as significant as the transition from hunter-gatherers to an agricultural civilization. Agriculture introduced new concepts such as economics, because each person could now trade with their neighbors. Governments had to be introduced to give large groups of people a set of rules to follow. Instead of men spending their time hunting for food, agriculture allowed them to raise sheep, cows, and chickens. Men then had time to think and could invent things to solve problems. Agriculture carried us through to the Industrial Revolution, the internet, and now…the age of artificial intelligence. Soon, AI will be so pervasive that in a few generations it will seem like nothing more than an overhyped calculator. But how does it work?

What’s next in human evolution? Do we become the machine?

There are various AI models, each suited to a particular application. For example, a self-driving vehicle wouldn’t use the same model as ChatGPT to drive a car. The category of models that I will focus on today is called a large language model. Artificial intelligence researchers developed these large language models to understand natural language in text and human speech. They are used to generate text and images from text prompts. These language models allow humans to communicate directly with the machine without writing in a programming language. Language models are trained mostly on large amounts of text such as literature, and multimodal variants also learn from images, speech, handwriting, and more. They find patterns in this data and learn to attach a confidence score to each possible output. The confidence score is a probability: a statistical estimate of how likely that output is to be the right one.

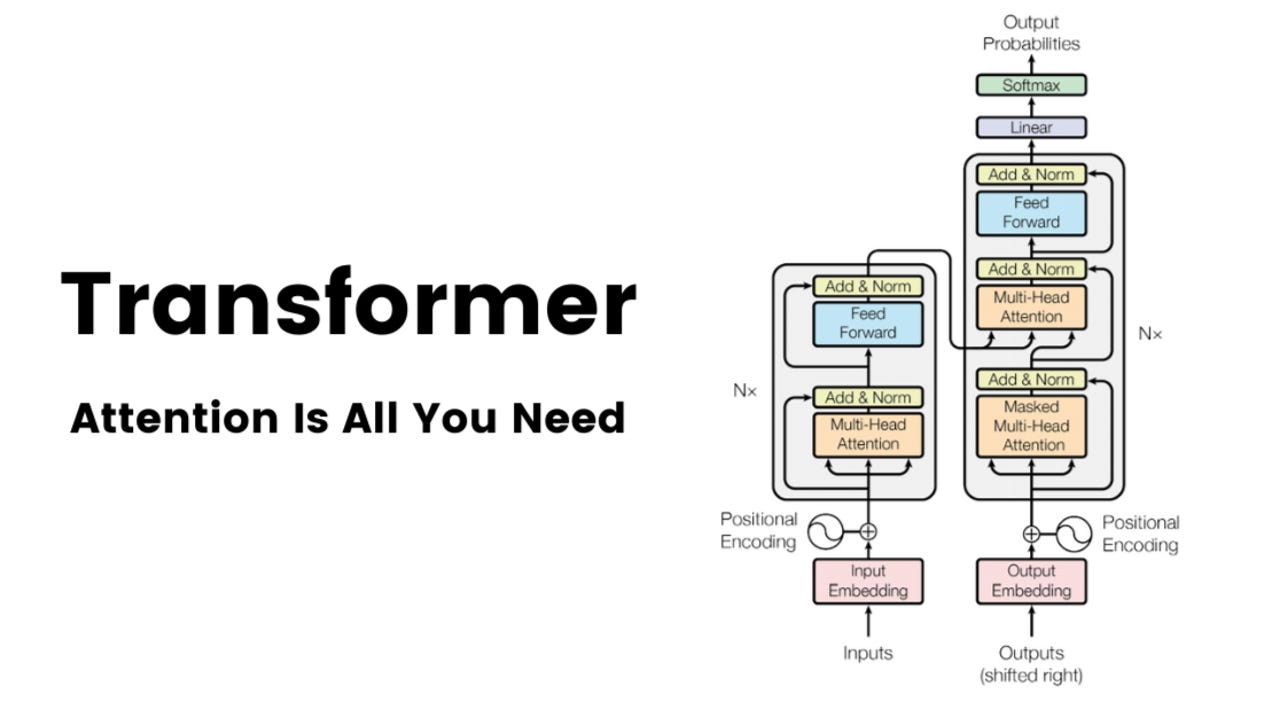

Artificial intelligence is an industry driven by research papers. The papers are held in high regard, and each one is debated by the best minds in the world. The research paper “Attention Is All You Need” introduced the Transformer neural network architecture. I’m just learning how it all works, so forgive me if I make any errors in the following explanation.

The transformer is the underlying technology of the language model. Transformers do sequence-to-sequence modeling, and they process the entire sequence of text all at once as opposed to piece by piece. The encoder layers are stacked on top of each other, so the neural network processes the sequence multiple times. This appears to let the transformer rediscover the classic NLP pipeline: the layers identify part-of-speech tags, followed by constituents, dependencies, semantic roles, and co-references. Each encoder layer includes a self-attention layer whose output feeds into a feed-forward neural network. The decoder has the same blocks as the encoder, plus an encoder-decoder attention layer.

Before any of that happens, the text has to be split into tokens. Google uses an unsupervised tokenizer called SentencePiece to encode and decode, while OpenAI uses tiktoken, with a vocabulary of about 50,000 tokens in its older encodings. Each token is then turned into a vector that represents it, and these vectors go into the self-attention layer. Self-attention produces importance scores between words of the same sequence. For example, it can connect the subject of a sentence to any pronoun that refers to it. After the decoder, a softmax layer assigns a probability to each word in the vocabulary.
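To make the self-attention and softmax steps a little more concrete, here is a minimal sketch in plain Python. It is a simplification and not how any production model is written: the three words, their two-number vectors, and the function names are all made up for illustration, and the vectors are used directly as queries, keys, and values, whereas a real transformer first multiplies them by learned weight matrices.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention over a list of word vectors.

    Assumption: each vector acts as its own query, key, and value.
    Real transformers learn separate projections for each role.
    """
    dim = len(vectors[0])
    all_weights = []
    all_outputs = []
    for query in vectors:
        # Importance score of every word in the sequence for this word.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # New representation: a weighted average of all the word vectors.
        output = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        all_weights.append(weights)
        all_outputs.append(output)
    return all_weights, all_outputs

# Hypothetical toy inputs: made-up 2-number vectors for three words.
words = ["the", "cat", "it"]
embeddings = [[0.1, 0.0], [1.0, 0.9], [0.9, 1.0]]

weights, outputs = self_attention(embeddings)
for word, row in zip(words, weights):
    print(word, [round(w, 3) for w in row])
```

Each printed row shows how much one word attends to every word in the sequence, including itself. Because the made-up vectors for “cat” and “it” point in similar directions, “it” puts more weight on “cat” than on “the”, which is the toy version of a pronoun finding its subject.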

Ms. Coffeebean is an amazing resource to learn about AI concepts.

Overall, I’ve been really interested in how AI works. I recently went to a Data Practitioners meetup in San Francisco to network with some AI folks. I started talking to a former tech lead who worked at Apple. I expressed my interest in getting into AI, and he gave me some advice: instead of going from 0 to large language models, start with smaller models. First learn linear regression, classification, clustering, and predictive and statistical models. I invited him to the podcast, so hopefully he accepts. I learn fast when I write articles about what I’m learning, so I will keep doing that. Check out the latest podcast with my favorite NFT project, $loot. This time with community member

Subscribe to Medallion XLN as we build the next generation of technology using XR, Blockchain, AI, and the power of decentralization to reclaim our digital sovereignty.
